I got interested in very cool project I found in github which was originally created by Microsoft and now is maintained in this repo https://github.com/Bertverbeek4PS/bc2adls

What this project is is a framework and Business Central app to load data from Business Central into Azure Datalake and then transform the data and analyse it.

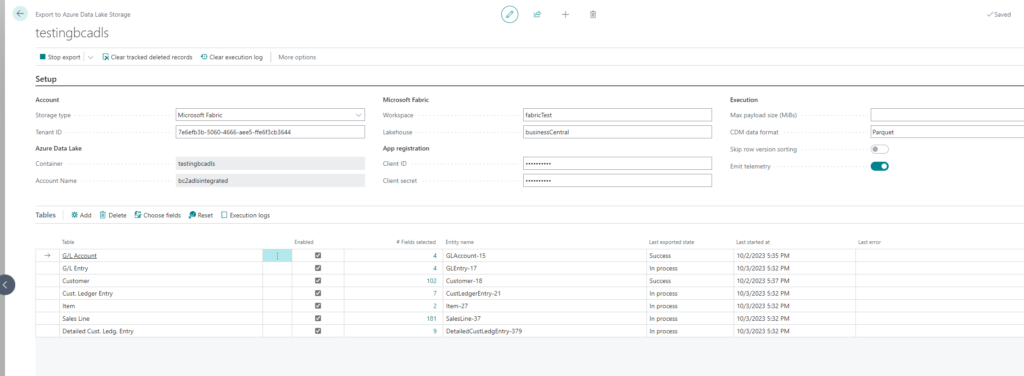

BC2ADLS allows you to choose which tables and columns you need and will take care of incremental loading so you will only get the changed data. It also takes care of multicompany and deleted records.

First you start off by creating the BC2ADLS setup in Business Central and also do some Azure Setup for the Azure Blob Storage where the initial CSV’s will be loaded.

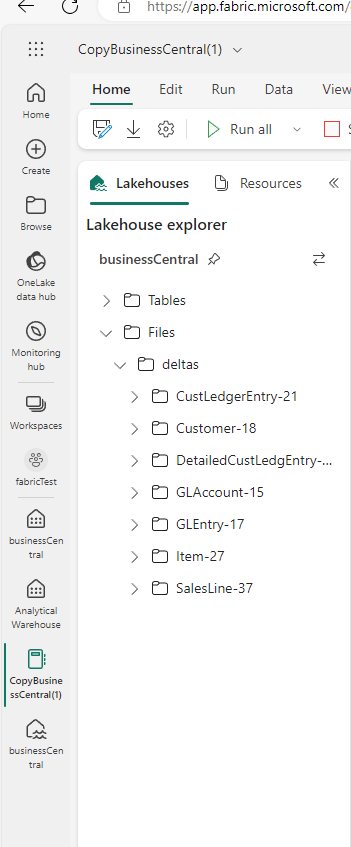

After the setup is done you can push your data into the Fabric data lake.

They will end up in the Lakehouse as csv files

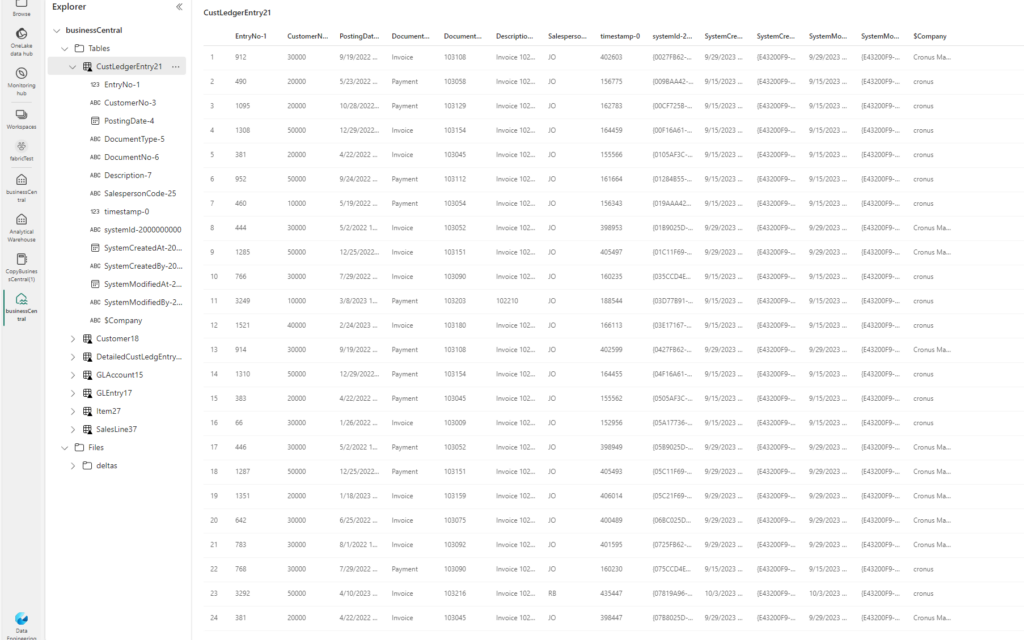

Now you can run Jyper Notebook to actually transform the CSV’s, clean the data etc and load them into Datalake tables.

Now you basically have the raw data from Business Central and you can start creating the analytics off them.

I created a new Datawarehouse in Fabric and then run off the queries against the Lakehouse.

For example I can find out the sales per company and per sales person:

Drop table if EXISTS SalesPerSalesperson;

Create table SalesPerSalesperson

AS (

SELECT

ledgentry.[$Company] as Company, ledgentry.[SalespersonCode-25] as SalesPerson, sum(detailledgentry.[Amount-7]) as Total

FROM businessCentral.dbo.[DetailedCustLedgEntry379] detailledgentry

left outer join businessCentral.dbo.[CustLedgerEntry21] ledgentry

ON detailledgentry.[CustLedgerEntryNo-2] = ledgentry.[EntryNo-1]

group by ledgentry.[$Company], ledgentry.[SalespersonCode-25]

)

And now I can consume the warehouse in PowerBI or Excel.

So what are my thoughts in this BC2ADLS project + Microsoft Fabric?

I think its pretty great. Its pretty easy to get cloud scale datawarehouse running with incremental loads for Business Central going this route. Fabric itself is just a wrapper for old technologies and there’s not much to learn there.